The value of

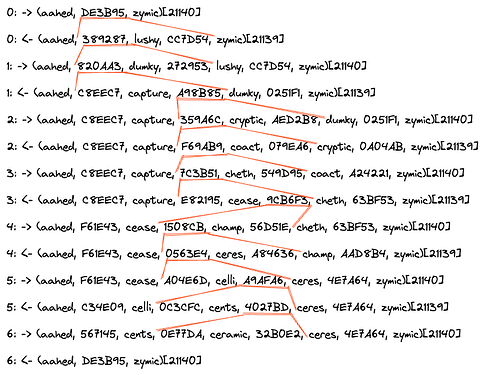

(prevTimestamp, eventHeight)is that it keeps the novelty together.

I expect this to be very important: keeping new events adjacent in the key space means we can place split keys around recent events, which enables fast sync of new events. That is going to be by far the most common operation.
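To illustrate why the ordering matters, here is a minimal sketch. It uses a hypothetical fixed-width key (a fixed-length per-stream prefix, then big-endian u64 prevTimestamp and eventHeight, then an 8-byte CID tail) instead of the CBOR encoding, and the helper names are made up. Every event of one stream newer than a given timestamp lands in a single contiguous range, so two binary searches around a split key find all of it.

```python
import bisect
import struct

PREFIX_LEN = 4                  # toy fixed-length stream prefix (assumption)
CID_LEN = 8                     # truncated event CID, as in the proposal's comment
SUFFIX_LEN = 8 + 8 + CID_LEN    # prevTimestamp + eventHeight + eventCID

def make_key(stream_prefix: bytes, prev_timestamp: int, event_height: int, event_cid: bytes) -> bytes:
    """Hypothetical fixed-width key: big-endian u64s sort byte-wise in numeric order."""
    assert len(stream_prefix) == PREFIX_LEN and len(event_cid) == CID_LEN
    return stream_prefix + struct.pack(">QQ", prev_timestamp, event_height) + event_cid

def recent_events(sorted_keys: list, stream_prefix: bytes, since: int) -> list:
    """All keys of one stream with prevTimestamp >= since, returned as one contiguous slice."""
    split_key = stream_prefix + struct.pack(">Q", since)  # split key just before recent events
    end_key = stream_prefix + b"\xff" * SUFFIX_LEN         # upper bound of this stream's range
    lo = bisect.bisect_left(sorted_keys, split_key)
    hi = bisect.bisect_right(sorted_keys, end_key)
    return sorted_keys[lo:hi]

def timestamp_of(key: bytes) -> int:
    """Decode the prevTimestamp field from a key built by make_key."""
    return struct.unpack(">Q", key[PREFIX_LEN:PREFIX_LEN + 8])[0]

# Two toy streams, each a list of (prevTimestamp, eventHeight) pairs.
streams = {b"strA": [(900, 1), (1000, 2), (1100, 3)], b"strB": [(950, 1), (1200, 2)]}
keys = sorted(
    make_key(p, ts, h, bytes([h]) * CID_LEN) for p, events in streams.items() for ts, h in events
)
print([timestamp_of(k) for k in recent_events(keys, b"strA", since=1000)])  # [1000, 1100]
```

The same property would hold for any encoding whose byte order follows (stream prefix, prevTimestamp, eventHeight); the fixed-width layout just keeps the sketch short.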

So I concur we want to include both prevTimestamp and eventHeight in the event id.

However, I still have some questions about the burden this places on nodes that generate event ids.

Do we need to ensure eventHeight is unique? That is, if there are concurrent writes to a stream (on the same node or on different nodes) and the nodes choose the same eventHeight because neither has seen the other's event, what happens? The event CID makes sure the two events still have unique event ids. Is there a process that eventually mutates one of the events so that it comes after the other? Or is a shared eventHeight a feature, in that it clearly shows a branch in the stream? We don't need to solve the conflict resolution problem here, but we do need to ensure that event ids are amenable to conflict resolution strategies.
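A minimal sketch of the concurrent-write case, using a hypothetical decoded EventId tuple whose field order mirrors the proposed sort order (find_branches is also made up for illustration). It does not resolve the conflict; it only shows that two events at the same eventHeight keep distinct ids, sort identically on every node because the CID bytes break the tie, and can be picked out as a branch.

```python
from collections import defaultdict
from typing import NamedTuple

class EventId(NamedTuple):
    """Hypothetical decoded event id; tuple order mirrors the proposed sort order."""
    stream_prefix: bytes
    prev_timestamp: int
    event_height: int
    event_cid: bytes

def find_branches(ids):
    """Map (stream_prefix, event_height) to the CIDs of every height claimed by more than one event."""
    by_height = defaultdict(list)
    # Sorting is deterministic: equal (prefix, timestamp, height) fall through to the CID bytes.
    for eid in sorted(ids):
        by_height[(eid.stream_prefix, eid.event_height)].append(eid.event_cid)
    return {key: cids for key, cids in by_height.items() if len(cids) > 1}

# Two nodes both extend the event at height 2 without seeing each other's write.
base = EventId(b"strA", 900, 2, bytes.fromhex("11" * 8))
from_node_a = EventId(b"strA", 1000, 3, bytes.fromhex("aa" * 8))
from_node_b = EventId(b"strA", 1000, 3, bytes.fromhex("0b" * 8))
ids = [from_node_a, base, from_node_b]

print(len(set(ids)))                                    # 3: the CIDs keep the ids unique
print(sorted(ids) == [base, from_node_b, from_node_a])  # True, regardless of arrival order
print(list(find_branches(ids)))                         # [(b'strA', 3)]
```

Whether a later process should rewrite one branch or keep both visible is exactly the open question above; the sketch only suggests that the proposed id layout does not get in the way of either choice.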

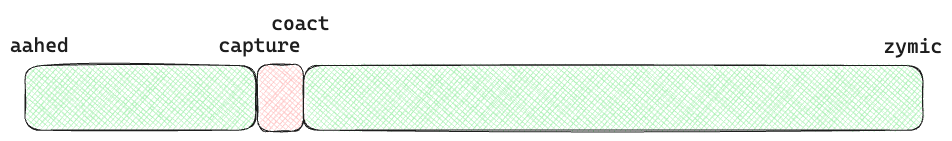

As an aside, another small cost of including (prevTimestamp, eventHeight) is that the event id gets longer. My assumption is that as long as an event id fits in one or two CPU cache lines, the size doesn't matter much.

Reading the spec, it seems an event id is about 70 bytes. If we can get that down to 64 bytes, an entire event id fits in a single CPU cache line. Using @jthor's suggestion of taking only 4 bytes from the init-event-cid takes us down to 66 bytes. We could also drop the CBOR structure or use varints more aggressively to get under 64 bytes quite easily. Or we could just use two cache lines and not try to get down to one. Either way, I expect we will want to pad event ids to a whole number of cache lines to take advantage of SIMD for comparisons etc. All of this is premature optimization, so we should run some experiments before adding complexity to the event id structure.
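The byte budget above can be sketched as follows, using the per-field sizes from the comments in the proposed layout (including its untested 4-byte CBOR overhead guess). The 64-byte cache line is an assumption (common on x86-64 and many ARM cores), and pad_to_cache_lines is a hypothetical helper, not part of any spec.

```python
CACHE_LINE = 64  # bytes; assumed, typical for x86-64 and many ARM cores

# Per-field sizes taken from the comments in the proposed layout (upper bounds).
FIELDS = {
    "networkId varint": 2,
    "separator tail": 16,
    "controller hash tail": 16,
    "init-event-cid tail": 8,
    "prevTimestamp": 8,
    "eventHeight": 8,
    "eventCID": 8,
    "cbor overhead (guess)": 4,
}

def cache_lines(n_bytes: int) -> int:
    """Number of cache lines needed to hold n_bytes (ceiling division)."""
    return -(-n_bytes // CACHE_LINE)

def pad_to_cache_lines(event_id: bytes) -> bytes:
    """Hypothetical helper: zero-pad an event id to a whole number of cache lines.

    A DAG-CBOR item is self-delimiting, so no valid id is a proper prefix of another;
    trailing zero padding therefore keeps the byte-wise order between distinct ids.
    """
    return event_id + bytes(cache_lines(len(event_id)) * CACHE_LINE - len(event_id))

baseline = sum(FIELDS.values())
short_init = baseline - 8 + 4  # @jthor's suggestion: 4 bytes of init-event-cid instead of 8
for label, size in [("baseline", baseline), ("4-byte init cid", short_init)]:
    print(f"{label}: {size} bytes -> {cache_lines(size)} cache line(s)")
```

Both variants print as needing two 64-byte lines (70 and 66 bytes), which is why getting under one line would take the further trimming mentioned above.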


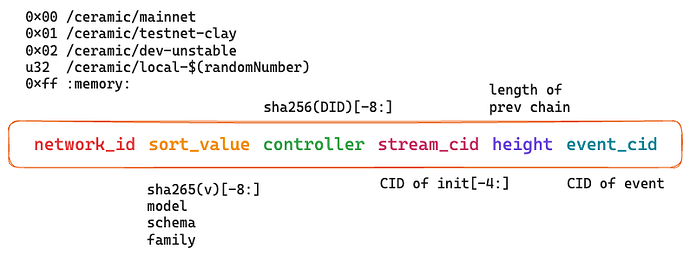

```
eventIdBytes = EncodeDagCbor([ // ordered list, not a dict
  concatBytes(
    varint(networkId),                               // 1-2 bytes
    last16Bytes(separator-value),                    // 16 bytes
    last16Bytes(hash-sha256(stream-controller-did)), // 16 bytes
    last8Bytes(init-event-cid)                       // 8 bytes
  ),
  prevTimestamp, // 8 bytes (u64)
  eventHeight,   // 8 bytes (u64), or maybe less if we use a varint
  eventCID       // 8 bytes
]) // 4 bytes cbor overhead (haven't tested, just a guess)
```
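To put a number on the CBOR overhead guess, here is a minimal sketch that encodes the array with a hand-written subset of DAG-CBOR (shortest-form unsigned ints, byte strings, arrays) rather than a real library. Assumptions: eventCID is carried as a plain 8-byte byte string rather than a tag-42 CID, networkId uses an unsigned LEB128 varint as in multiformats, and the separator, controller-hash, and CID tails are placeholder bytes. All function names are hypothetical.

```python
import struct

def uvarint(n: int) -> bytes:
    """Unsigned LEB128 varint, as used by multiformats (assumed encoding for networkId)."""
    out = bytearray()
    while True:
        low7 = n & 0x7F
        n >>= 7
        if n:
            out.append(low7 | 0x80)  # more bytes follow
        else:
            out.append(low7)
            return bytes(out)

def cbor_head(major: int, arg: int) -> bytes:
    """CBOR initial byte plus argument, always in the shortest form (a DAG-CBOR rule)."""
    if arg < 24:
        return bytes([(major << 5) | arg])
    for info, fmt, limit in ((24, ">B", 1 << 8), (25, ">H", 1 << 16), (26, ">I", 1 << 32), (27, ">Q", 1 << 64)):
        if arg < limit:
            return bytes([(major << 5) | info]) + struct.pack(fmt, arg)
    raise ValueError("argument does not fit in u64")

def cbor_encode(item) -> bytes:
    """Encode only the subset needed here: unsigned ints, byte strings, and lists."""
    if isinstance(item, int) and not isinstance(item, bool) and item >= 0:
        return cbor_head(0, item)
    if isinstance(item, bytes):
        return cbor_head(2, len(item)) + item
    if isinstance(item, list):
        return cbor_head(4, len(item)) + b"".join(cbor_encode(x) for x in item)
    raise TypeError(f"unsupported item: {item!r}")

def event_id_bytes(network_id, separator_tail, controller_tail, init_cid_tail,
                   prev_timestamp, event_height, event_cid_tail) -> bytes:
    """Hypothetical encoder following the ordered-list layout of the proposal."""
    prefix = uvarint(network_id) + separator_tail + controller_tail + init_cid_tail
    return cbor_encode([prefix, prev_timestamp, event_height, event_cid_tail])

# Placeholder tails; only their lengths matter for sizing.
SEP, CTRL, INIT, CID = b"\x01" * 16, b"\x02" * 16, b"\x03" * 8, b"\x04" * 8
U64_MAX = (1 << 64) - 1

typical = event_id_bytes(0, SEP, CTRL, INIT, 1_700_000_000, 5, CID)
worst = event_id_bytes(300, SEP, CTRL, INIT, U64_MAX, U64_MAX, CID)
print(len(typical), len(worst))  # 59 72
```

Under these assumptions the worst case (2-byte networkId, full u64 values) comes to 72 bytes, i.e. 6 bytes of CBOR overhead rather than 4. With a seconds-resolution timestamp, a small height, and networkId 0 it comes to 59 bytes, because DAG-CBOR's shortest-form integers already behave like the varints mentioned above.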