Ceramic currently timestamps all events written to the network through a system called anchoring. Events are included in a large Merkle tree, and a transaction carrying the root hash of that tree in its payload is submitted to Ethereum. Anchoring is performed by the Ceramic Anchor Service (CAS). Right now only one CAS is available on the network, and it’s operated by 3Box Labs.
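To make the idea concrete, here is a minimal sketch of reducing a batch of events to a single root hash. It is not Ceramic's actual tree construction: the use of SHA-256, the duplication of the last node on odd-sized levels, and the names `sha256` and `merkle_root` are all assumptions chosen for illustration.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    """Hash helper (assumed hash function; the real tree may use another)."""
    return hashlib.sha256(data).digest()


def merkle_root(events: list[bytes]) -> bytes:
    """Reduce a batch of events to one 32-byte root hash.

    Illustrative only: leaves are hashed events, and an odd-sized level
    duplicates its last node before pairing.
    """
    if not events:
        raise ValueError("need at least one event to anchor")
    level = [sha256(event) for event in events]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # pad odd levels (assumed convention)
        level = [
            sha256(level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0]


# Only this root would need to go into the on-chain transaction payload.
root = merkle_root([b"event-a", b"event-b", b"event-c"])
```

The point of the design is that one on-chain transaction covers an arbitrarily large batch: changing any single event changes the root.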

Why anchoring?

So why does Ceramic even need anchoring? There are three main reasons (really only two, since one is historical). Let’s explore each of them.

Key rotation, e.g. 3ID

While 3ID is no longer actively supported in Ceramic, it’s worth mentioning here for completeness, since it was the original reason to introduce anchoring in Ceramic in the first place.

The core problem 3ID was solving was how to keep a permanent identifier over time while allowing the keys with admin control over that identifier to change. This required timestamps in order to know which of two conflicting key rotations happened first, e.g. key1 → key2 or key1 → key3. Anchors allowed us to easily pick the key rotation that was anchored earliest.
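The "earliest anchor wins" rule can be sketched as follows. The `AnchoredRotation` type, its fields, and `winning_rotation` are hypothetical names for illustration, and the sketch assumes each competing rotation already has a known anchor time; it is not 3ID's actual conflict-resolution code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnchoredRotation:
    """A key rotation together with the time of its anchor (hypothetical type)."""
    new_key: str
    anchor_time: int  # Unix seconds of the block containing the anchor


def winning_rotation(candidates: list[AnchoredRotation]) -> AnchoredRotation:
    """Pick the conflicting rotation that was anchored earliest."""
    if not candidates:
        raise ValueError("no rotations to choose from")
    return min(candidates, key=lambda rotation: rotation.anchor_time)


# key1 -> key2 and key1 -> key3 conflict; the earlier anchor wins.
to_key2 = AnchoredRotation(new_key="key2", anchor_time=1_700_000_600)
to_key3 = AnchoredRotation(new_key="key3", anchor_time=1_700_000_000)
winner = winning_rotation([to_key2, to_key3])
```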

Automatic OCAP revocation

Our system of OCAPs (object capabilities, sometimes referred to as CACAOs in our system) doesn’t yet have a way of direct revocation. However, when a user creates an OCAP and delegates to a session key, the OCAP may contain an expiry time. The main function anchors serve today is to make sure that data created using these OCAPs is timestamped before the expiry time (modulo a grace period).

The main risk this is trying to mitigate is key loss. If a key is stolen, the thief can at least only use that key for as long as the OCAP is valid. It is worth noting that there currently isn’t any way in Ceramic to explicitly revoke an OCAP right away.
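The expiry check described above can be sketched as a single comparison. The function name, the parameter names, and the interpretation of the grace period as extra time added after the expiry are assumptions for illustration, not Ceramic's actual validation logic.

```python
def is_within_capability(
    anchor_time: int,
    expiry: int,
    grace_period: int = 0,
) -> bool:
    """Return True if an event's anchor timestamp falls inside the OCAP's
    validity window.

    All values are Unix seconds. The grace period is assumed to extend the
    window, since anchoring happens some time after the event is written.
    """
    return anchor_time <= expiry + grace_period


# An event anchored 5 seconds after expiry passes only with a grace period.
late_without_grace = is_within_capability(anchor_time=105, expiry=100)
late_with_grace = is_within_capability(
    anchor_time=105, expiry=100, grace_period=10
)
```

Under this sketch, a stolen session key stops being useful once no new event can obtain an anchor timestamp inside the window.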

Trustless Timestamps

Besides key management, the main value proposition of anchoring is tamper-proof timestamps. These allow developers and users of Ceramic to see when a piece of data was created. More specifically, they can know that the data was created before a specific point in time, which is sometimes referred to as proof-of-publication.
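As a rough illustration of how such a proof could be checked, the sketch below verifies that an event is included under a given Merkle root. The proof format (a list of `(side, sibling_hash)` pairs), the SHA-256 hash, and the function names are assumptions, not Ceramic's anchor proof format. A verifier would additionally compare the root with the one in the on-chain transaction and treat that block's timestamp as the upper bound on the event's creation time.

```python
import hashlib


def h(data: bytes) -> bytes:
    """Hash helper (assumed hash function)."""
    return hashlib.sha256(data).digest()


def verify_inclusion(
    event: bytes,
    proof: list[tuple[str, bytes]],
    root: bytes,
) -> bool:
    """Recompute the path from an event up to the root.

    Each proof step names the side ("left" or "right") on which the
    sibling hash sits at that level of the tree.
    """
    node = h(event)
    for side, sibling in proof:
        if side == "left":
            node = h(sibling + node)
        elif side == "right":
            node = h(node + sibling)
        else:
            raise ValueError(f"unknown side: {side!r}")
    return node == root


# Two-event tree: the root is the hash of both leaf hashes concatenated.
event_a, event_b = b"event-a", b"event-b"
anchored_root = h(h(event_a) + h(event_b))
proof_for_a = [("right", h(event_b))]
ok = verify_inclusion(event_a, proof_for_a, anchored_root)
```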

A few questions arise from this:

- Do current and future developers and users actually need timestamps?

- If yes, is the current approach to anchoring optimal from a cost and efficiency perspective?

- Or would they prefer to have more control over which data is timestamped, and when?

The answers to these three questions aren’t obvious and likely depend heavily on the differing needs of different use cases.

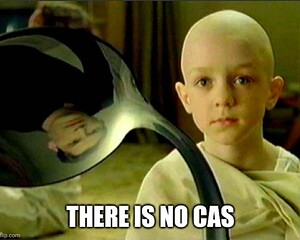

Key revocation without anchoring

Can the key revocation functionality we need be achieved without anchoring at all? This would certainly be desirable, as it would remove a lot of the protocol complexity involved in running CAS (or the planned rust-ceramic self-anchoring feature). It would also allow us to address the need for trustless timestamps from a more product-centric perspective, rather than as a side effect of technical constraints.

… Retracted proposal …

Conclusion

Given that key rotation is no longer a goal of Ceramic (since it’s handled on-chain by “smart accounts”), anchoring as it exists today on Ceramic is no longer an optimal solution to OCAP revocation. It may be possible to retain an alternative form of OCAP revocation even without anchoring.

If we decide to let go of anchoring altogether, the question of trustless timestamps can be reapproached from a more product-centric perspective, giving users and developers on Ceramic more optionality over whether and how they get trustless timestamps.