Discussion for CIP-145: Multiple previous (multi-prev)

Hi! In general, this looks very nice! Posting some thoughts from a first proper readthrough:

Semantics

In my mind, it’s not super clear from the list of definitions whether a data event is considered uncovered if only used as prev in a time event. This is the definition given:

Ais anuncovered eventif there is no event withA’s CID in itsprevfield.

Here is one example of an unclear example:

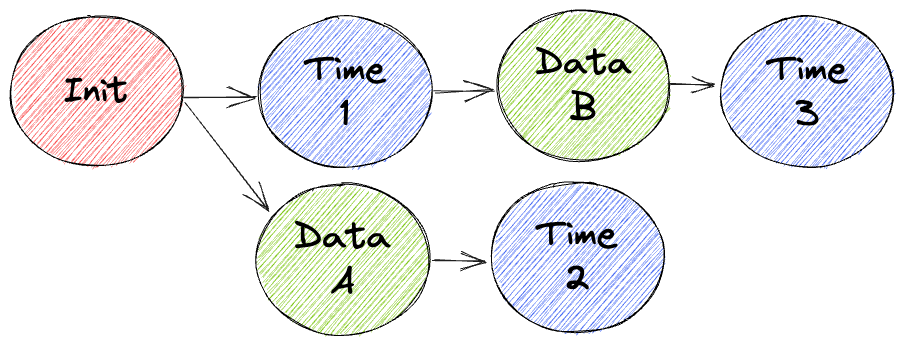

- If a stream is in a diverged state (see events

AandBin fig. 5), we only consider branches that contain valid, uncovered Data Events.

Looking at the graph, there are no uncovered Data Events given the initial definition, as there are time events that use them as prev:

Is an uncovered event the same as a stream tip, or is it explicitly a data event, followed by 0-1 time events, but not any additional data events?

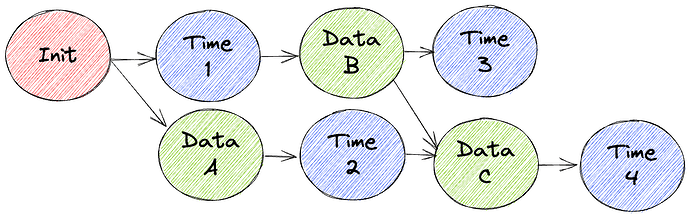

Merge points

When the CIP states “the controller fixes [the problem] with a data event C”, does that mean that trying to create a non-multi-prev data event will fail on reasons of stale state? Or is it up to the controller whether it wants to merge the fork?

Also, I want to verify my understanding of anchor times and hence stream ordering here:

- init @ 1

- data A @ 2

- data B @ 3

- data C @ 4

Multiple uncovered fork events

Consider this property:

- For branches that contain valid, uncovered Data Events, we only consider the first Data Event on a branch after the fork.

What if there are two events? This ties into my other thread re. late publishing attacks (Trace/verifiability of late publishing attacks), but consider I create a checkpoint of two fast data events in a row, they are anchored but not transmitted to the network. Call this branch A.

I later on work on a fork from the init event, building up a series of data events. Call this branch B. Something makes me want to undo my publication, so I transmit the checkpoint events to the network. Will the multi-prev consensus resolution then just merge my first commit from B, into the A branch? Or will it work some other way?

In general, what is the reason for the one-event limit? It feels like there is some particular case it avoids, but I can’t figure it out ![]()

Thanks ![]()

Hi @m0ar,

We’ve finally gotten back to working on this spec, and really appreciate your questions, feedback, and patience ![]()

We’ve introduced two new terms to clarify what we mean here - dominant and non-dominant Data Events.

* A `dominant Data Event` is one that is not covered by another Data Event.

* A `non-dominant Data Event` is one that is covered by another Data Event. This applies transitively through Time

Events.

An event can be “covered” by another Data Event or Time Event that has the former’s CID in its prev field. Dominant/non-dominant Data Events are only categorized as such based on whether they’re covered specifically by another Data Event.

The controller can continue building on any branch, though it is desirable to build on the tip. Stream state aggregation will ignore any Data Events that not covered by the tip.

Multiple events in a row don’t matter for the purposes of conflict resolution. Considering the earliest Data Events on branches after a fork (and their earliest Time Events) gives us more stability in the precedence rules. Nothing that happens on a branch after the fork changes the outcome of the precedence rules, versus flip-flopping on the outcome based on something like longest-log.

The controller creating the merge event can choose which of the prevs to put first, which becomes the base for applying its delta. If there is more than one Data Event in the candidate branches, the multi-prev list will hold the CIDs of the latest event from each branch.

You don’t need a data withholding attack in order to induce this checkpoint behavior. By creating a merge event where the first CID in the prev list is the Init Event and the second is the current tip, you will apply your delta against the Init state. Because you covered the tip, the new Data Event now becomes the tip, and you have effectively rewound the stream state.

We really appreciate your comments. Clarifying these details in the spec has definitely made it more rigorous.

We just put up a PR for our changes. If you’re interested in participating in the review, please let us know your GitHub handle, and we’ll add you to the review.

It’s @m0ar there as well, thanks ![]()

Wouldn’t this just be a new commit that resets all fields to the genesis state? The important question here is whether the history can change, i.e., can I still find the previous version right before this merge when inspecting the log? If so, it’s the same as the controller just pushing a new event where the fields are reset.

If not, it would mean that the controller can always just delete all stream history and create a fully new tip from genesis. This is very problematic for our use case, as we need to be able to inspect the change history of a stream.

Yes, good question.

The Multi-Prev spec, once implemented, will allow historical events to always be a part of the stream history, even if the stream is intentionally wound back. This means that even if a stream author intentionally winds back their stream, historical events will continue to exist as part of the prev chain, as long as nodes don’t garbage collect them.

A stream could potentially also be “compacted” using this mechanism. For example, if a stream becomes large, the author could create a new commit with the CIDs of the current tip as first in the prev list and the Init Event as second. The delta gets applied against the current tip. In this state, the stream gets “compacted” down to 3 events - the Init Event, the Merge Event, and a Time Event. Historical events can (but don’t have to) be garbage collected to reduce storage.

To answer your specific question, this is not quite the same as a new commit that resets all the fields. Because it includes both the Init Event and the current tip, all prior history is still reachable via the multiple previouses.

Multi-prev allows stream authors to legitimately rewind their streams, reducing the length of the log without losing track of historical events.

A plain new Data Event attempting to branch off of the Init Event will be rejected by conflict resolution, as today. The only exception to this is the late-publishing attack, which must be solved by other means.

Can you elaborate on how you imagine querying historical state would work? How could I compute the series of changes from genesis that took the stream to the current tip? How do I practically inspect a log, if it’s no longer linear?

I am also curious on what specifically motivates this intentional stream-rewind feature? Or is it a consequence of the multi-prev design?

From how I understand it, it will be quite hard for us to work with version history for a multi-prev stream. For users, this is always going to be expected as linear. We can jump through (a few) hoops to get there, that’s fine, but verifiable history is a hard requirement for us to be able to use ceramic. Ideally, we’d like to be able to resolve version 3 of a stream (genesis + 2 data events), deterministically.

We’ve made some updates. The protocol’s default behavior will be to place the tip as the first CID in the prev list of any Merge Event.

If the application would like to observe the linear history of the stream, it can track events from the tip to the Init Event by looking at the first CID in the prev list.

If instead the application would like to inspect the entire history of the stream, it can traverse the full DAG back to the Init Event.

In other words, there is a straightforward way to inspect the history, much like the way it is inspected today, i.e. following the tip back to the Init Event.

You’re right - it is just a consequence of the multi-prev design, not the goal.

With the tip CID always being placed in the first slot of the prev list, there should now always be a simple way to inspect the linear history that is not any less efficient or thorough than the current way of inspecting history.

The new mechanism allows (but doesn’t force) applications to be more thorough in their inspection, if so desired.

Cool, that change makes a lot of sense! Sorts out most confusion I think. ![]()