Co-authored with @StephH.

Motivation

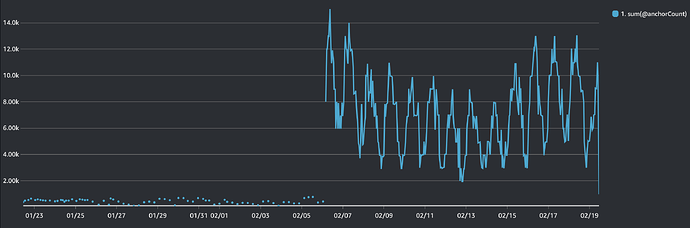

The Ceramic Anchoring Service (CAS) is currently operated and funded by 3Box Labs. It is a centralized service that is both a single point of failure and a bottleneck for scaling the network.

There are already efforts underway to progressively decentralize anchoring in the Ceramic network to remove the community’s dependence on 3Box Labs infrastructure. This, however, is a task that will require several months of discussion, planning, coordination, implementation, and testing.

In order to address our immediate network scaling needs for upcoming partner engagements, this proposal covers a few possible options for scaling the CAS as it works today. This will allow us to scale the network enough to tide us over till full anchoring decentralization is achieved.

Background

The CAS API is a stateless service that ingests anchor requests from all Ceramic nodes on the network, stores them in an AWS RDS database, and posts them to an SQS queue.

The CAS Scheduler reads from the SQS queue, validates and batches the anchor requests, then posts these batches to a separate SQS queue.

A CAS Anchor Worker reads an anchor batch from SQS, creates a corresponding Merkle tree, and anchors it to the blockchain. Thereafter, the Worker creates a Merkle CAR file that contains the entire Merkle tree, along with the associated proof and the anchor commits corresponding to the anchor requests included in the tree.

Multiple Anchor Workers can run at the same time without conflicting with each other. The only resource that simultaneously running Workers will contend over is the ETH transaction mutex.

The CAS API is also responsible for servicing anchor status requests from Ceramic nodes across the network. It does this by loading the Merkle CAR file corresponding to an anchored request and returning a Witness CAR file that includes the anchor proof, the Merkle root, and the intermediate Merkle tree nodes corresponding to the anchored commit.
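To make the relationship between the full Merkle tree and a per-request witness concrete, here is a minimal sketch. It is not the CAS format: it assumes plain SHA-256 over concatenated child hashes instead of IPLD nodes and CIDs, pads odd levels by duplicating the last node, and uses hypothetical function names (`build_levels`, `witness_path`, `verify`).

```python
import hashlib


def h(data: bytes) -> bytes:
    """Hash helper (assumption: SHA-256; the real CAS links IPLD nodes by CID)."""
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every level of the tree, hashed leaves first and the root last."""
    if not leaves:
        raise ValueError("cannot build a tree from an empty batch")
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]  # pad an odd level by duplicating its last node
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def witness_path(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect the sibling at each level for the leaf at `index`.

    Each entry is (sibling_hash, sibling_is_on_the_right).
    """
    path = []
    for level in levels[:-1]:
        sib = index ^ 1
        sibling = level[sib] if sib < len(level) else level[index]
        path.append((sibling, index % 2 == 0))
        index //= 2
    return path


def verify(leaf: bytes, path: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the root from a leaf and its witness path."""
    node = h(leaf)
    for sibling, sibling_on_right in path:
        node = h(node + sibling) if sibling_on_right else h(sibling + node)
    return node == root


if __name__ == "__main__":
    requests = [f"request-{i}".encode() for i in range(5)]
    levels = build_levels(requests)
    root = levels[-1][0]
    path = witness_path(levels, 3)
    print(len(path), verify(requests[3], path, root))
```

The point of the sketch is that a witness only needs one sibling per level, so it stays small even when the full tree is large.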

Scaling the API

The CAS API can service a lot more requests than it can handle today merely by running more instances of the API. Since it is a stateless service, scaling it horizontally increases the number of requests that it can handle at any time.

An important consideration here is that an increase in the number of anchor requests that the API handles will likely correspond to an increase in the number of anchor status requests as well. This, in turn, means that the API will need to load Merkle CAR files a lot more frequently.

Another related consideration is that increasing the depth of the Merkle tree (discussed below) will result in significantly larger Merkle CAR files.

Even though we do currently have a mechanism for caching recent Merkle CAR files, additional API instances and/or larger Merkle CAR files will likely have an impact on latency and S3 bandwidth utilization.

The last consideration here is scaling the RDS database in which the CAS API stores anchor requests. This database will need to be scaled to handle the increased number of anchor requests, but this is as simple as a configuration change.

CAS Auth

CAS Auth via the AWS API Gateway, while useful at a small scale for providing our community self-service access to the CAS, has not been able to keep up with the demands of our larger partners. As a result, we have had to repeatedly bypass CAS Auth and provide direct access to the CAS API.

This mechanism can remain in place for the community CAS, but we should consider dedicated CAS clusters for larger partners that do not use CAS Auth. This would also reduce the risk of a single partner’s traffic affecting the CAS API’s ability to service other partners, especially now that we have partners with significant traffic.

Scaling Request Processing

Number of Anchor Workers

The number of Anchor Workers can be increased to process more anchor requests in parallel. This will allow the CAS to process more anchor requests in a given time period.

The main bottleneck here is the ETH transaction mutex. This is a global mutex that prevents multiple Anchor Workers from sending transactions to the Ethereum blockchain at the same time. This is necessary to prevent nonce reuse, which could invalidate some transactions.
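A minimal sketch of why the mutex matters, using threads in a single process as a stand-in for Anchor Workers. The `TransactionSender` class and its fields are hypothetical, and a real deployment with Workers in separate processes would need a shared lock, not an in-process one. The sketch only shows that reading and incrementing the nonce under one lock gives every transaction a distinct nonce.

```python
import threading


class TransactionSender:
    """Hypothetical stand-in for the component that submits anchor transactions."""

    def __init__(self, starting_nonce: int = 0) -> None:
        self._lock = threading.Lock()
        self._next_nonce = starting_nonce
        self.sent: list[tuple[int, str]] = []  # (nonce, merkle_root) pairs

    def send(self, merkle_root: str) -> int:
        # Hold the lock across "read nonce, submit, increment" so that two
        # workers can never submit with the same nonce.
        with self._lock:
            nonce = self._next_nonce
            self.sent.append((nonce, merkle_root))  # placeholder for the real submit
            self._next_nonce = nonce + 1
            return nonce


def run_workers(sender: TransactionSender, roots: list[str]) -> None:
    """Simulate one worker per Merkle root, all sending concurrently."""
    threads = [threading.Thread(target=sender.send, args=(root,)) for root in roots]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    sender = TransactionSender()
    run_workers(sender, [f"root-{i}" for i in range(8)])
    print(sorted(nonce for nonce, _ in sender.sent))
```

Because every send serializes on the lock, adding Workers raises throughput only for the work done outside the critical section, such as tree construction.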

The other bottleneck is IPFS. Anchor Workers store intermediate Merkle tree nodes, anchor proofs, and anchor commits to IPFS. They also publish anchor commits to PubSub.

Increase Depth of Merkle Tree

Increasing the depth of the Merkle tree will allow the CAS to include more anchor requests in each batch. This means that with the same number of Anchor Workers, and without increasing contention for the ETH transaction mutex, the CAS can process more anchor requests in a given time period.

An important consideration here is the size of the Merkle CAR files. Larger Merkle CAR files will require more bandwidth to load and store in S3. Individual Witness CAR files will also be larger, including additional intermediate Merkle tree nodes to represent the deeper Merkle tree. This will require more bandwidth to transfer to the requesting Ceramic nodes.
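As a rough back-of-the-envelope illustration of the two growth rates, the sketch below assumes a full binary tree with 2^d leaves, one leaf per anchor request. Under that assumption the tree holds 2 * 2^d - 1 nodes in total, while the path from one leaf to the root passes through d levels. The function name `tree_stats` is hypothetical, and the figures are node counts, not byte sizes.

```python
def tree_stats(levels_below_root: int) -> dict[str, int]:
    """Node counts for a full binary Merkle tree with 2**levels_below_root leaves."""
    leaves = 2 ** levels_below_root
    return {
        "leaves": leaves,
        "total_nodes": 2 * leaves - 1,       # drives the Merkle CAR file size
        "witness_nodes": levels_below_root,  # one sibling per level on the path
    }


if __name__ == "__main__":
    for d in (16, 20):
        stats = tree_stats(d)
        print(f"2^{d}: {stats}")
```

Going from 2^16 to 2^20 leaves multiplies the number of nodes in the whole tree by about 16, but adds only 4 nodes to each witness path.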

Using deeper Merkle trees (e.g. 2^20) would also mean storing and publishing a far larger number of anchor commits to IPFS per batch, since the number of requests in a batch grows exponentially with tree depth. This is untenable and would likely require us to eliminate the use of IPFS for storing/publishing anchor commits.

Additionally, deeper Merkle trees will result in longer anchor commit validation times on Ceramic nodes. This is something we would need to characterize through testing.

Scaling IPFS

IPFS has been an unreliable component of the CAS. It has been a source of significant operational issues, and has been a bottleneck for scaling the CAS.

The CAS currently stores intermediate Merkle tree nodes, anchor proofs, and anchor commits in IPFS. It also publishes anchor commits to IPFS PubSub.

It would be beneficial to eliminate IPFS from the anchoring process entirely, instead relying on alternative mechanisms for providing Ceramic nodes with anchor-related information.

This is a significant change that would require a lot of coordination and testing. It also assumes that Ceramic’s anchor polling mechanism is rock-solid, and can become the sole provider of anchor information to Ceramic nodes via the CAS API.

Scaling the Scheduler

At a high level, the CAS Scheduler is already set up for processing a very large number of requests per second.

The only consideration here is how its batching mechanism works w.r.t. SQS. The batching process holds incoming anchor requests “in-flight” until either a batch is full or the batch linger timeout has run out. This means that there can be up to a full batch’s worth of requests “in-flight” at any time.
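The "full batch or linger timeout" rule can be sketched as follows. This is an illustrative model only, not the Scheduler's implementation: the class name `LingerBatcher`, its methods, and the injectable clock are all assumptions made for the example.

```python
import time
from typing import Callable, Optional


class LingerBatcher:
    """Hold requests until the batch is full or the linger timeout expires."""

    def __init__(
        self,
        max_size: int,
        linger_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_size = max_size
        self.linger_seconds = linger_seconds
        self._clock = clock
        self._pending: list[str] = []
        self._first_at: Optional[float] = None  # arrival time of the oldest pending request

    def add(self, request_id: str) -> Optional[list[str]]:
        """Add a request; return a batch if this request filled it."""
        if not self._pending:
            self._first_at = self._clock()
        self._pending.append(request_id)
        if len(self._pending) >= self.max_size:
            return self._flush()
        return None

    def poll(self) -> Optional[list[str]]:
        """Return a partial batch if the oldest request has lingered long enough."""
        if self._pending and self._clock() - self._first_at >= self.linger_seconds:
            return self._flush()
        return None

    def in_flight(self) -> int:
        """Number of requests currently held back waiting for a batch."""
        return len(self._pending)

    def _flush(self) -> list[str]:
        batch, self._pending, self._first_at = self._pending, [], None
        return batch
```

In this model `in_flight()` can reach `max_size - 1` just before a batch completes, which is why the batch size is bounded by whatever in-flight limit the queue imposes.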

SQS has a limit (120K) on the maximum number of messages that can be “in-flight” at any point in time. This means that for a deeper Merkle tree we must either:

- Update the algorithm to use a different mechanism for batching.

- Limit the size of the batch (and thus of the Merkle tree) to a number less than 120K.

- Add an extra layer of batching, i.e. for a tree depth of 2^20, the first layer batches a maximum of 2^16 requests at a time, then a second layer groups 16 such batches into a larger 2^20-sized batch.
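The third option can be sketched as two rounds of chunking. The constants come from the figures above (a 120K in-flight limit, 2^16 requests per first-layer batch, 16 first-layer batches per tree); the function names are hypothetical, and the sketch works on in-memory lists where the real Scheduler would read from SQS.

```python
SQS_IN_FLIGHT_LIMIT = 120_000  # limit quoted in the text above
SUB_BATCH_SIZE = 2 ** 16       # first-layer batch size
SUB_BATCHES_PER_TREE = 16      # second-layer group size (16 * 2**16 == 2**20)


def chunk(items: list, size: int) -> list[list]:
    """Split `items` into consecutive chunks of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def two_layer_batches(
    request_ids: list,
    sub_batch_size: int = SUB_BATCH_SIZE,
    group_size: int = SUB_BATCHES_PER_TREE,
) -> list[list[list]]:
    """Group requests into sub-batches, then group sub-batches into tree-sized batches."""
    sub_batches = chunk(request_ids, sub_batch_size)
    return chunk(sub_batches, group_size)


if __name__ == "__main__":
    # A first-layer batch fits under the in-flight limit; a full 2^20 batch does not.
    print(SUB_BATCH_SIZE < SQS_IN_FLIGHT_LIMIT < SUB_BATCH_SIZE * SUB_BATCHES_PER_TREE)
    # Small numbers to show the shape of the output.
    print(two_layer_batches(list(range(10)), sub_batch_size=2, group_size=3))
```

With these numbers only the first layer ever holds individual requests in flight, at most 65,536 at a time, while the second layer handles 16 batch-level messages per tree.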

Recommendation

The following list is the order of operations we would recommend (assuming the desired Merkle tree depth is 2^20).

Some of these steps can happen in parallel, and some are optional or can be done later.

- Set up a new CAS Cluster with 9 API instances.

- Remove IPFS.

- (Optional) Increase number of workers to 4.

- Increase Merkle tree depth to 2^16.

- Update CAS Scheduler to support batches up to 2^20.

- Increase Merkle tree depth to 2^20.