While Ceramic serves as an excellent infrastructure for public data, there seems to be a strong desire to also use it for more sensitive data. Two main topics usually arise in conversations about sensitive data: the ability to delete data, and the ability to restrict which actors can read it. This forum post attempts to describe possible future directions for the Ceramic protocol when it comes to storing sensitive data.

Data deletion

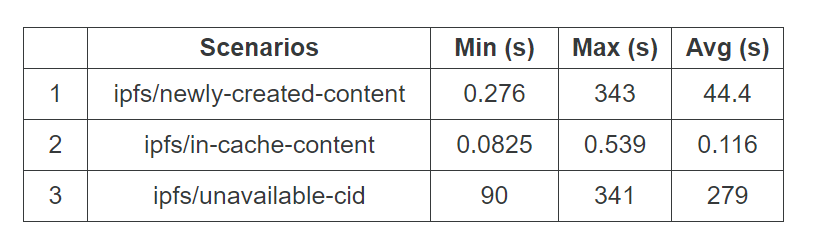

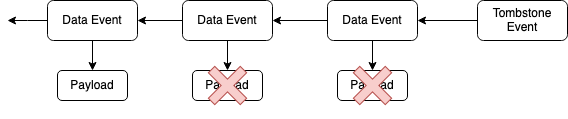

One of the core principles of Ceramic event streams is that they should be independently verifiable without trusting any third party. This makes deleting data hard, because removing an event from an event log would break the integrity of that log. There is, however, a small modification to the event log data structure that could fix this problem. Currently, the data structure of an event log looks as depicted in Figure 1, where the payload sits inside the same logical object as the Data Event.

Figure 1: the current data structure of an event log. Here an arrow represents a hash pointer, e.g. Data Events to the right contain the hash of the previous Data Event.

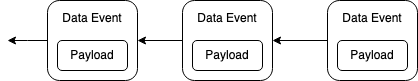

It would be fairly easy to separate the payload from the Data Event envelope by having the envelope simply contain the hash (e.g. CID) of the payload.

Figure 2: separating payloads from the Data Event envelope. Now the envelope only contains the hash of the payload.
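To make the idea concrete, here is a minimal sketch of an event log whose envelopes carry only the hash of their payload. It is not Ceramic's actual encoding: the field names `prev` and `payload_hash`, the helper functions, and the use of SHA-256 over JSON in place of a real CID are all assumptions made for illustration.

```python
import hashlib
import json


def digest(obj) -> str:
    """Hash a JSON-serializable object; stands in for a CID in this sketch."""
    encoded = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def make_event(prev, payload):
    """Build an envelope that stores only the payload hash, not the payload."""
    envelope = {"prev": prev, "payload_hash": digest(payload)}
    return envelope, payload


def verify_log(envelopes) -> bool:
    """Check that each envelope points at the hash of the one before it."""
    prev = None
    for envelope in envelopes:
        if envelope["prev"] != prev:
            return False
        prev = digest(envelope)
    return True


# Payloads live in a separate store, keyed by their hash (hypothetical layout).
payload_store = {}
log = []
prev = None
for payload in [{"name": "alice"}, {"name": "alice", "email": "a@example.com"}]:
    envelope, body = make_event(prev, payload)
    payload_store[envelope["payload_hash"]] = body
    log.append(envelope)
    prev = digest(envelope)

# Dropping a payload leaves the envelope chain untouched.
del payload_store[log[1]["payload_hash"]]
chain_still_valid = verify_log(log)
```

Because the hash pointers only ever cover envelopes, `verify_log` never needs the payload bytes, which is what lets a payload disappear without invalidating the log.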

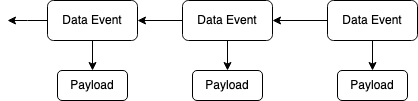

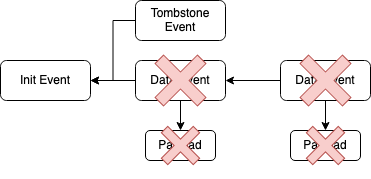

This simple change would allow for the deletion of payload data while keeping the integrity of the event log intact. We could introduce a new type of event called a “Tombstone Event” (see Figure 3) that lists the payloads that should be deleted.

Figure 3: a tombstone event that lists the payloads to be deleted.
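As a rough sketch of how a node might react to such an event, the snippet below removes the listed payloads from a local store. The tombstone layout (`type`, `prev`, `delete`), the `apply_tombstone` helper, and the SHA-256 stand-in for a CID are hypothetical; a real event would presumably also be signed and validated before a node acted on it.

```python
import hashlib
import json


def digest(obj) -> str:
    """Hash a JSON-serializable object; stands in for a CID in this sketch."""
    encoded = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def apply_tombstone(tombstone, payload_store):
    """Delete every payload the tombstone names; return the hashes removed."""
    removed = []
    for payload_hash in tombstone["delete"]:
        if payload_store.pop(payload_hash, None) is not None:
            removed.append(payload_hash)
    return removed


# Two payloads stored by hash, separately from their envelopes.
public_note = {"note": "hello"}
secret_note = {"note": "my home address"}
payload_store = {digest(p): p for p in (public_note, secret_note)}

tombstone = {
    "type": "tombstone",               # assumed event type name
    "prev": "hash-of-previous-event",  # placeholder hash pointer
    "delete": [digest(secret_note)],   # payloads to be removed
}
removed = apply_tombstone(tombstone, payload_store)
```

Applying the same tombstone twice is harmless in this sketch: the second call finds nothing left to delete and returns an empty list.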

A more drastic type of Tombstone Event that deletes all events in a stream could also be added. It would only need to include the Init Event hash pointer and signal that all other events in that stream should be deleted.

Figure 4: A tombstone event that revokes all data in a stream.
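A stream-wide tombstone could be handled along the following lines. This is only a sketch: the `stream_tombstone` type name, the `init` field, and the in-memory `streams` mapping (Init Event hash to an ordered list of event hashes) are assumptions, not part of any existing Ceramic API.

```python
def apply_stream_tombstone(tombstone, streams):
    """Keep only the Init Event of the named stream; return how many events were dropped."""
    init_hash = tombstone["init"]
    events = streams.get(init_hash)
    if not events:
        return 0
    # The first entry is the Init Event itself; everything after it is removed.
    streams[init_hash] = events[:1]
    return len(events) - 1


# Hypothetical local index: Init Event hash -> ordered event hashes.
streams = {
    "init-abc": ["init-abc", "data-1", "data-2"],
    "init-xyz": ["init-xyz", "data-9"],
}
removed = apply_stream_tombstone(
    {"type": "stream_tombstone", "init": "init-abc"}, streams
)
```

Other streams held by the node are left untouched, since the tombstone names exactly one Init Event.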

Content encryption

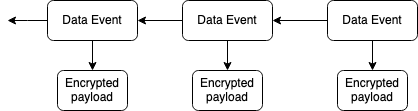

So far we’ve only talked about public data and the deletion of this data. Another step to make Ceramic more private is to encrypt the payload of events. Some applications built on Ceramic already do this; however, separating the payload as described above gives developers more flexibility.

Figure 5: Encrypted payloads

Worth noting is that different methods of encryption provide different levels of security. For example, encrypting with a symmetric key and a strong cipher can provide security against an attacker with a quantum computer, with the drawback that exchanging keys between multiple parties is difficult.

Using asymmetric cryptography makes it easier to share keys between multiple parties, but most asymmetric cryptography used in production today will break with the introduction of quantum computers, so it is not a good alternative if that’s included in your threat model.

Access control

Currently, all data in Ceramic is publicly accessible. There are some great advantages to this, namely verifiability and decentralization. However, in some cases you might not want to expose this data to the public. A future change to the Ceramic protocol could allow a stream creator to specify that only certain DIDs are allowed to read the data. This could be coupled with the separated payload data outlined above to retain public verifiability of metadata while keeping payload data private. Note, however, that the stream creator would have to specifically select a node that they trust to store this data and to give access only to authorized DIDs.
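A trusted node enforcing such a rule might look roughly like the sketch below, where envelopes are served to anyone but payloads are returned only to DIDs on a reader list. The `readers` field, the `read_event` function, and the stream layout are hypothetical, and the sketch assumes the requester has already proven control of the DID through some authentication step that is not shown.

```python
def read_event(stream, index, requester_did):
    """Return (envelope, payload); payload is None unless the DID may read it."""
    envelope = stream["envelopes"][index]
    payload = None
    if requester_did in stream["readers"]:
        # May still be None if the payload was deleted from the store.
        payload = stream["payloads"].get(envelope["payload_hash"])
    return envelope, payload


# Hypothetical stream as held by the trusted node.
stream = {
    "readers": {"did:key:alice", "did:key:bob"},  # set by the stream creator
    "envelopes": [{"prev": None, "payload_hash": "hash-1"}],
    "payloads": {"hash-1": {"diagnosis": "private"}},
}

envelope_for_bob, payload_for_bob = read_event(stream, 0, "did:key:bob")
envelope_for_eve, payload_for_eve = read_event(stream, 0, "did:key:eve")
```

Both callers receive the same envelope, so anyone can still verify the log's structure, while only the listed DID receives the payload itself.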