I’ve previously discussed private data on Ceramic through the lens of data deletion, and at the end of that post I briefly mentioned access control. The idea is to add functionality to Ceramic nodes that provides physical access control: users control how their data is replicated in the network and by whom. Only accounts authorized by the original author of the data would be allowed to read and sync that data on the network. Physical access control is something Ceramic could, in theory, solve extremely well. The 3Box Labs team is currently exploring how best to add this functionality by building a proof of concept (PoC) of the feature directly in rust-ceramic. This forum post explains how the PoC will work.

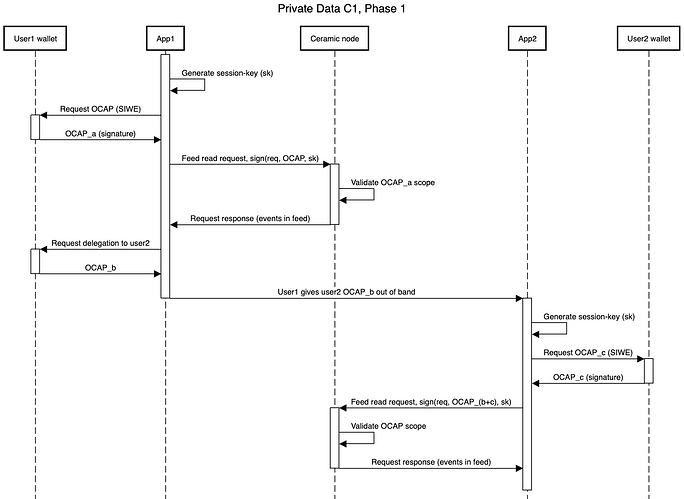

Phase 1: API read access control

In phase 1 the main focus is adding read access control to the `/feed` endpoint on rust-ceramic nodes. A Ceramic node would filter the events it returns from the feed based on the permissions the requesting user holds. The user needs to sign every request they make to this API with a key that has been granted an object capability (OCAP) signed by the user's account. The figure below demonstrates the flow for user1 to read their own data, and how user1 can delegate read permissions to user2.

- User1 accesses the playground app and can request read access by delegating to a session key that lives locally, creating `OCAP_a`.
- The session key then signs every request to the `/feed` endpoint, which returns data based on the permissions in `OCAP_a`.
- In the app, user1 can delegate read permissions to user2. This prompts user1 to sign another message that explicitly delegates this permission, creating `OCAP_b`.
- `OCAP_b` is sent to user2 out of band.
- User2 now goes through the same procedure as user1, but with the addition of `OCAP_b`.

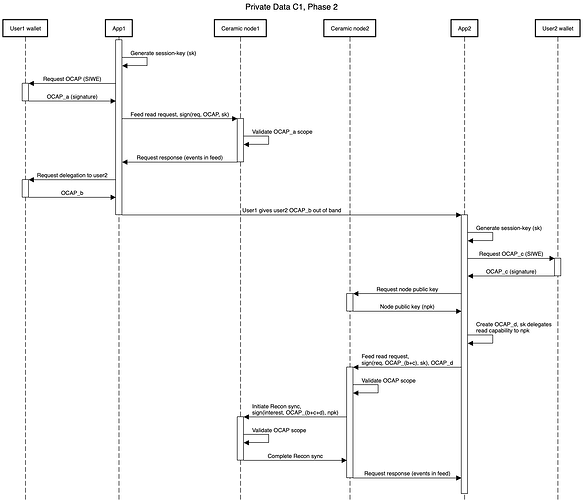
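To make the phase 1 flow more concrete, here is a minimal Python sketch of how a node might filter `/feed` results using a delegation chain. It is not the rust-ceramic implementation: the `Ocap` and `Event` types, the `did:`/`session:` identifiers, and the functions `readable_owner` and `filter_feed` are hypothetical. Signatures are left out entirely, and the sketch assumes the node has already verified that the request was signed by `requester`. The name `ocap_c` for user2's delegation to their own session key is also just a label chosen here.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Hypothetical model: signature checks are assumed to happen before these
# functions run, so `requester` is an already-authenticated session key.


@dataclass(frozen=True)
class Ocap:
    """Simplified object capability: `issuer` lets `audience` exercise
    `ability` over the events authored by `owner`."""
    issuer: str
    audience: str
    owner: str
    ability: str = "read"


@dataclass(frozen=True)
class Event:
    author: str
    payload: str


def readable_owner(chain: Sequence[Ocap], requester: str) -> Optional[str]:
    """Return the account whose data `requester` may read through this
    root-first delegation chain, or None if the chain is invalid."""
    if not chain:
        return None
    root = chain[0]
    # Only the owner of the data may start a read delegation chain for it.
    if root.issuer != root.owner or root.ability != "read":
        return None
    for parent, child in zip(chain, chain[1:]):
        # Each link must be issued by the previous audience and must keep
        # the same resource owner and ability.
        if child.issuer != parent.audience:
            return None
        if child.owner != root.owner or child.ability != root.ability:
            return None
    # The final audience must be the key that signed the /feed request.
    return root.owner if chain[-1].audience == requester else None


def filter_feed(events: List[Event], chains: List[List[Ocap]], requester: str) -> List[Event]:
    """Keep only the events whose author granted `requester` read access."""
    allowed = set()
    for chain in chains:
        owner = readable_owner(chain, requester)
        if owner is not None:
            allowed.add(owner)
    return [event for event in events if event.author in allowed]


# OCAP_a: user1's account delegates read access to a local session key.
ocap_a = Ocap("did:user1", "session:user1", "did:user1")
# OCAP_b: user1 delegates read access to user2 (sent out of band).
ocap_b = Ocap("did:user1", "did:user2", "did:user1")
# user2 delegates OCAP_b onward to their own session key.
ocap_c = Ocap("did:user2", "session:user2", "did:user1")

feed = [Event("did:user1", "hello"), Event("did:user3", "secret")]
print(filter_feed(feed, [[ocap_a]], "session:user1"))
# → [Event(author='did:user1', payload='hello')]
print(filter_feed(feed, [[ocap_b, ocap_c]], "session:user2"))
# → [Event(author='did:user1', payload='hello')]
print(filter_feed(feed, [[ocap_c]], "session:user2"))
# → []
```

The last call shows why user2 must present `OCAP_b` together with their own delegation: without it, the chain does not start at the data owner and the node returns nothing.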

Phase 2: Network level read access control

In phase 2 of the PoC, nodes can also use the capabilities to synchronize data over the network. From a UX perspective this looks and feels very similar to the phase 1 prototype. Under the hood, however, an additional step further delegates the capability to the node's keypair.

In the figure above, the main difference is that each user is using a separate node. When user2 sends a request to their node, the session key creates a new `OCAP_d` that delegates the permissions to the node's public key. The node can then use this capability chain to register a new interest in Recon. User1's node can validate the full capability chain and therefore knows it can safely send the data to user2's node.
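The node-level check could look something like the following Python sketch. Everything here is hypothetical: each capability points at its parent through a `proof` field, `delegate` creates the next link, and `events_to_send` stands in for the check user1's node might run before answering an interest. The `Interest` type is not Recon's real data structure, signatures are omitted, and the sketch assumes the sync connection already authenticates the remote node's key, so `node_key` can be trusted.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical model: signatures are omitted, and (author, payload) tuples
# stand in for stored events.
StoredEvent = Tuple[str, str]


@dataclass(frozen=True)
class Ocap:
    """Simplified read capability; `proof` is the capability it was delegated
    from (None for the root issued by the data owner)."""
    issuer: str
    audience: str
    owner: str
    proof: Optional["Ocap"] = None


def delegate(parent: Ocap, audience: str) -> Ocap:
    """The holder of `parent` delegates the same permission to `audience`."""
    return Ocap(parent.audience, audience, parent.owner, parent)


def chain_is_valid(cap: Ocap) -> bool:
    """Walk from the leaf back to the root, checking every link."""
    while cap.proof is not None:
        parent = cap.proof
        if cap.issuer != parent.audience or cap.owner != parent.owner:
            return False
        cap = parent
    # The root must be issued by the account that owns the data.
    return cap.issuer == cap.owner


@dataclass(frozen=True)
class Interest:
    """Hypothetical sync interest: a node asks for one owner's events."""
    node_key: str  # assumed to be authenticated by the transport
    owner: str
    capability: Ocap


def events_to_send(interest: Interest, local_events: List[StoredEvent]) -> List[StoredEvent]:
    """Run on user1's node: send events only if the chain is valid, ends at
    the requesting node's key, and covers the requested owner."""
    cap = interest.capability
    if not chain_is_valid(cap):
        return []
    if cap.audience != interest.node_key or cap.owner != interest.owner:
        return []
    return [event for event in local_events if event[0] == interest.owner]


ocap_b = Ocap("did:user1", "did:user2", "did:user1")  # user1 -> user2
ocap_c = delegate(ocap_b, "session:user2")            # user2 -> session key
ocap_d = delegate(ocap_c, "node:user2")               # session key -> node key

store = [("did:user1", "event-1"), ("did:user3", "event-2")]
print(events_to_send(Interest("node:user2", "did:user1", ocap_d), store))
# → [('did:user1', 'event-1')]
print(events_to_send(Interest("node:mallory", "did:user1", ocap_d), store))
# → []
```

Storing the parent inside each capability means the remote node only has to present the leaf (`ocap_d`), and user1's node can still walk the whole chain back to the data owner.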

Potential improvement for future flexibility

As suggested by Danny Browning, the read flow could be improved by adding a level of indirection between the session key and the node. This has the added benefit of supporting use cases such as service restrictions, group assignment, and revocation. In deployments with large amounts of horizontal sharding, this indirection could be moved into an access control management service that your Ceramic node then trusts.

In this flow, the user generates an OCAP token, which the service then attenuates with additional restrictions. Those restrictions are determined either from the user directly, or by assigning the user to a group and then determining the permissions for that group.
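The attenuation step could look roughly like the following Python sketch. The `AccessControlService` class, its policy tables, and the `model:` resource names are all hypothetical. Permissions are modeled as plain sets of resource identifiers, and attenuation is modeled as set intersection, which guarantees that the service can only narrow what the user granted, never widen it.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Optional, Set


@dataclass(frozen=True)
class Token:
    """Simplified capability token; `parent` is the token it was attenuated from."""
    subject: str
    resources: FrozenSet[str]
    parent: Optional["Token"] = None


@dataclass
class AccessControlService:
    """Hypothetical service holding per-user and per-group restrictions."""
    user_policy: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    groups: Dict[str, str] = field(default_factory=dict)
    group_policy: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    revoked: Set[str] = field(default_factory=set)

    def policy_for(self, subject: str) -> FrozenSet[str]:
        # A per-user restriction takes precedence; otherwise use the group's.
        if subject in self.user_policy:
            return self.user_policy[subject]
        group = self.groups.get(subject)
        if group is None:
            return frozenset()
        return self.group_policy.get(group, frozenset())

    def attenuate(self, token: Token) -> Token:
        # Revoked subjects end up with an empty token.
        allowed = frozenset() if token.subject in self.revoked else self.policy_for(token.subject)
        # Intersection can only remove resources from the user's token.
        return Token(token.subject, token.resources & allowed, parent=token)


service = AccessControlService(
    groups={"did:user2": "analysts"},
    group_policy={"analysts": frozenset({"model:posts"})},
)
user_token = Token("did:user2", frozenset({"model:posts", "model:profiles"}))
print(sorted(service.attenuate(user_token).resources))  # → ['model:posts']

service.revoked.add("did:user2")
print(sorted(service.attenuate(user_token).resources))  # → []
```

Keeping the original token as `parent` mirrors the idea that a node trusting the service can still trace the attenuated token back to what the user actually signed.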

A final benefit of this approach is that it enables integration with a backing blockchain or verifiable off-chain storage, so that additional information, such as the aforementioned group assignments, can be shared between nodes.